Introduction to the Finite Element Method (FEM)#

The evolution of a physical quantity in time and space can usually be described in terms of partial differential equations (PDEs). But most of the time, such PDEs cannot be solved with analytical methods. Various types of discretization and numerical methods can be employed to construct an approximation of the original problem, defined as a numerical model. The finite element method (FEM) is one way to compute such approximations.

The Poisson equation#

Let’s start with a reference in the field of scientific computing : the heat equation, which models how a quantity such as heat (denoted as \(u\)) diffuses through a given region \(\Omega\)

Imagine a 1D stick illustrated as the interval \([0, 1]\) being held at a fixed temperature \(u(0)=0\) at one end, and \(u(1)=1\) at the other. Initially, there will be some variation in temperature, but after some time the rod will reach a steady-state temperature distribution, making time derivative \(\frac{\partial u}{\partial t}\) negligible. The Laplacian term \(\Delta u\) reads as \(\Delta u = \frac{\partial^2u} {\partial x^2}\) in 1D, which leads to

also known as the Poisson equation, very useful and very popular as a first step when learning about the finite element method. The concepts involved in solving the Poisson equation generalise very neatly to more complicated equations and higher dimensions.

For brevity, we write derivatives with a subscript, so the second derivative with respect to \(x\) is \(u_{xx}\).

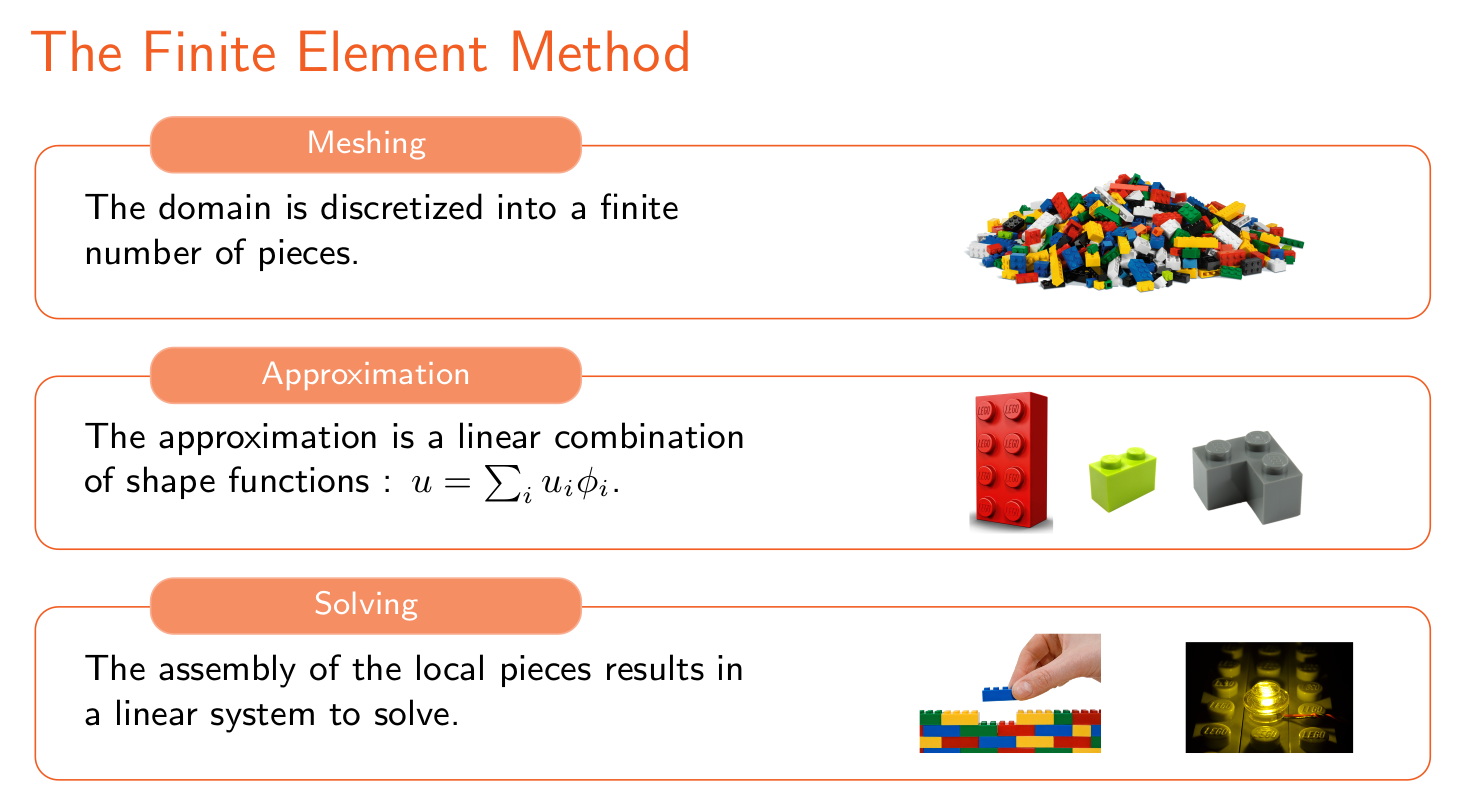

Step 1 - The mesh#

Before jumping in the mathematical details, let’s start with the finite element discretization. Despite the enormous progress in terms of computer power, computers are still limited to solving a finite number of equations. We then need to discretize our problem in space (discretization of our domain \(\Omega\)), and in time when considering time dependent problems. The discretization in space consists in the subdivision of \(\Omega\) into a (finite) set of “bricks” which we call a mesh.

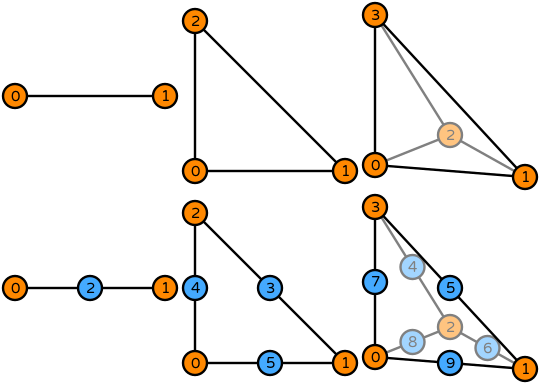

The interval domain \(\Omega = [0,1]\) can be easily discretized by choosing \(N = 6\) points \([x_1, \dots, x_{N}]\) distributed uniformly in the domain, of which the first point is \(x_1=0\) and the last point is \(x_6=1\). The mesh then consists in the 5 intervals that comes out of this. We denote the distance between two adjacent mesh points by \(h=\frac 1 {N-1}\).

Step 2 - The weak form#

The previously introduced Poisson equation written as previously is called strong form, of which \(u\) is the strong solution. A characteristic feature of the Finite Element Method (FEM) approach is the reformulation of this form into an equivalent form called weak form. The interest of such a formulation resides in the usability of real and functional analysis concepts as the Hilbert and Sobolev function spaces, providing a strong mathematical foundation to the FE method in comparison to other methods. One shall also note that the strong solution always satisfies the weak formulation.

The weak form is obtained by multiplying the previous strong form by an arbitrary function \(v\) called test function, and integrating this expression over the domain \(\Omega\). Generally speaking, this leads to find \(u \in X(\Omega)\) such that

where \(a\) and \(f\) are a bilinear and a linear form, respectively, and with \(X(\Omega)\) and \(Y(\Omega)\) are two function spaces to be determined.

The weak formulation of our previous Poisson problem then reads:

In some sense, this is ‘easier’ to satisfy than the original equation, because it is possible that \(\int \limits_0^1 f \: \text{dx} = 0\) even though \(f \neq 0\) at every point. However, it is possible to show that, roughly speaking, if the integral equation is true for every function \(v\), then the original equation must be true as well, so requiring the latter equation to hold for all \(v\) gives us something equivalent to the original equation.

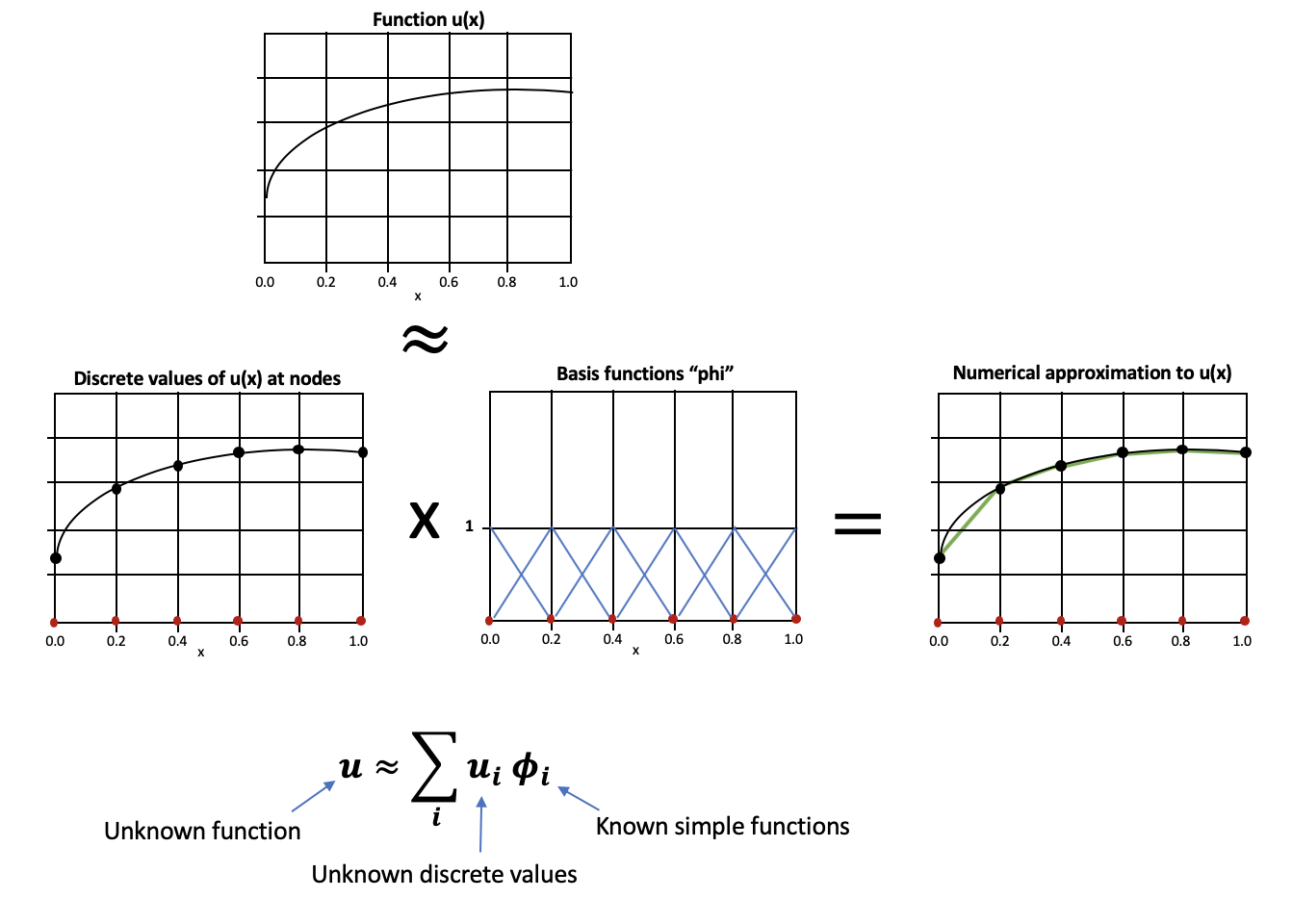

Step 3 - The weak solution#

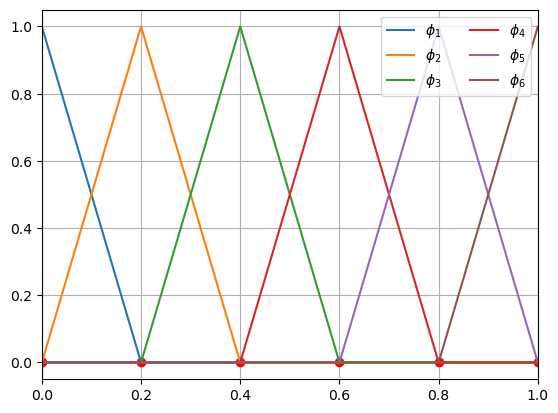

The previous weak formulation supposes the knowledge of the function spaces \(X(\Omega)\) (and \(Y(\Omega)\), but we focus on the case \(X(\Omega)\) = \(Y(\Omega)\)). The FE method is a way of approximating the continuous space \(X(\Omega)\) as a discrete space \(X_{\mathcal{N}}(\Omega)\). The approximation \(u_\mathcal{N} \in X_{\mathcal{N}}(\Omega)\) (discrete) of our strong solution \(u \in X(\Omega)\) (continuous) is defined as a linear combination of basis functions \(\{ \phi_i \}_{i=1}^{\mathcal{N}}\) such that :

The basis functions defining this function space are defined from the type of finite element.

Ciarlet defines a finite element as a tuple \((K, P_K, \Sigma_K)\) composed of :

A geometrical domain \(K\) i.e. simplex or hypercube

A primal space \(P_K\) as a polynomial space of finite dimension where the basis function \(\{ \phi_i \}_{i=1}^{\mathcal{N}}\) live

A dual space \(\Sigma_K\) as a set of linear functionals \(\{ \sigma_i : P_K \rightarrow \mathbb{R} \}\) called degrees of freedom. These degrees of freedom as defined such that :

The Lagrange finite elements (of order \(k\)) are \(H_1\) conforming elements, i.e. suitable for variational problems with solution living in a \(H_1\) Hilbert space. These elements are the mostly used type of FE, defining the degrees of freedom \(\sigma_i\) to be the evaluation of the polynomials at interpolation points :

The number of degrees of freedom per mesh cell i.e. per element depends on the polynomial degree \(k\)

A large variety of finite elements and their basis functions are shown at DefElement, a finite element encyclopedia by Matthew W. Scroggs.

Back to our Poisson problem defined on the interval \(\Omega = [0,1]\) with \(N=6\) points, we can plot the lowest order (P1) basis functions \(\{ \phi_i \}_{i=1}^6\) :

%matplotlib inline

import numpy as np

import matplotlib.pyplot as plt

N = 6

x = np.linspace(0, 1, N)

def phi(i):

y = np.zeros(N)

y[i] = 1.

return y

plt.plot(x, np.zeros_like(x), "ro")

for i in range(N):

plt.plot(x, phi(i), label=rf"$\phi_{i+1}$")

plt.xlim(0, 1)

plt.grid()

plt.legend(ncol=2, loc="upper right");

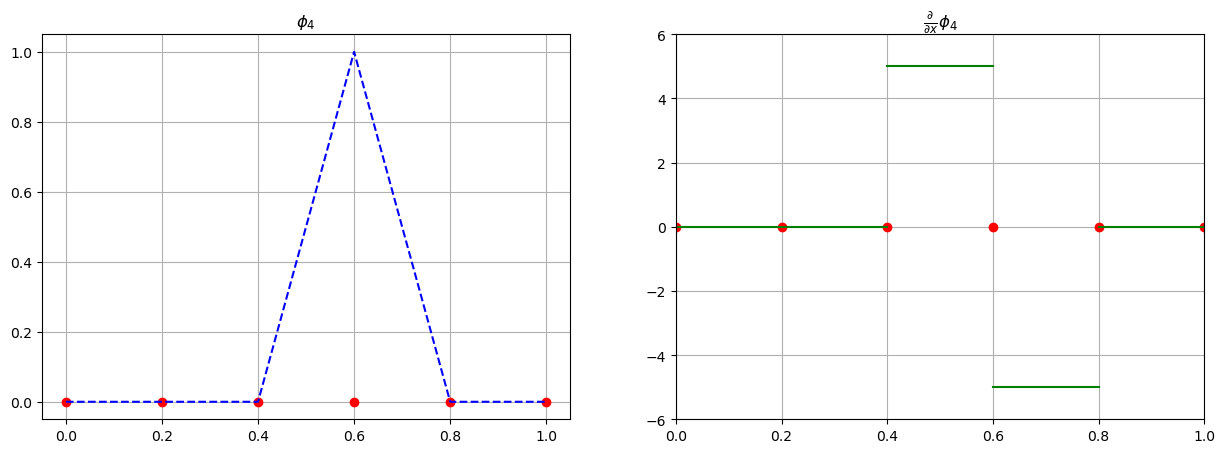

One shall note that the basis functions of our approximation space have discontinuous derivative in space as shown for \(\phi_3\) in the following plots :

f, (ax1, ax2) = plt.subplots(1, 2, figsize=(15, 5))

i = 3

h = 1/(N-1)

ax1.set_title(rf"$\phi_{i+1}$")

ax1.plot(x, np.zeros(N), "ro")

ax1.plot(x, phi(i), "b--")

ax1.grid()

ax2.set_title(r"$\frac{\partial}{\partial x}$"+rf"$\phi_{i+1}$")

ax2.plot(x, N*[0], "ro")

ax2.grid()

x1, x2 = x[:i], x[(i+1):]

ax2.plot(x1, [0]*x1.shape[0], "g-")

ax2.plot(x2, [0]*x2.shape[0], "g-")

ax2.plot([x[i-1], x[i]], [1/h, 1/h], "g-")

ax2.plot([x[i], x[i+1]], [-1/h, -1/h], "g-")

ax2.set_ylim(-N, N)

ax2.set_xlim(0, 1)

(0.0, 1.0)

Then, the second derivatives \(\phi_{xx}\) are zero everywhere and are infinite on the corresponding mesh point \(x_i\), which becomes an issue when dealing with \(u_{xx} = \sum \limits_{i=1}^\mathcal{N} u_i (\phi_i)_{xx}\). The integration by part together wih the Green’s theorem allow us to rewrite the second derivatives in space in the variational form, which becomes:

Back to our 1D example with \(\Omega = [0,1]\), this weak formulation reads:

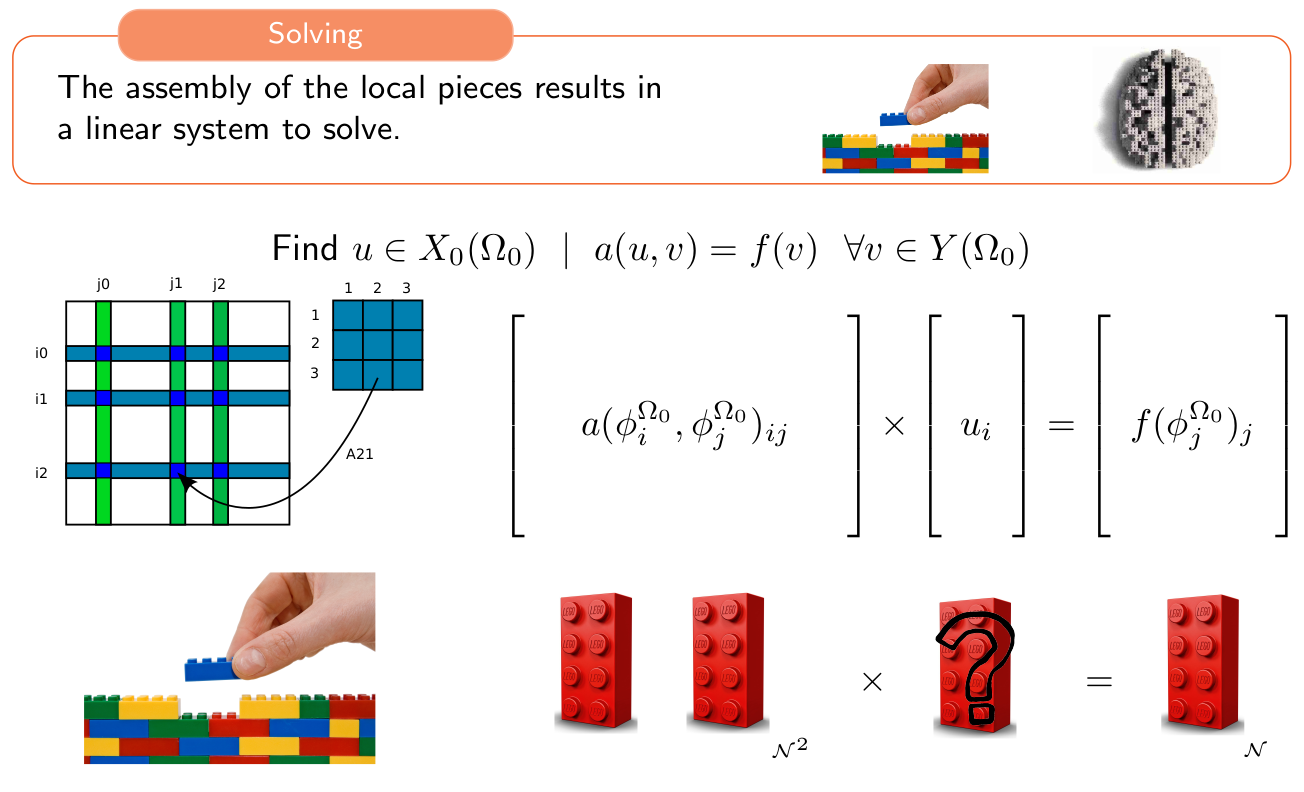

Step 4 - Assembling and solving the system#

Again, the FE method approximates the strong solution \(u\) of our problem as a linear combination of discrete values at the degrees of freedom (interpolation points) multiplied by the corresponding basis functions. The use of lowest order Lagrange FE together with our previous discretization of the 1D domain \(\Omega = [0,1]\) can be illustrated as follows :

The discretized weak formulation \(a(u_{\mathcal N},v_{\mathcal N}) = f(v_{\mathcal N})\) previously introduced can be written as a system

using the definition of \(u_{\mathcal N} \in X_{\mathcal N}(\Omega)\) as \(u_{\mathcal N} = \sum \limits_{i=1}^{\mathcal N} u_i \phi_i\) and the discrete test functions \(v_{\mathcal N} \in X_{\mathcal N}(\Omega)\) as \(v_{\mathcal N} = \sum \limits_{j=1}^{\mathcal N} v_j \phi_j\).

Indeed, we can write

and

Then, finding \(u_{\mathcal N} \in X_{\mathcal N}(\Omega)\) such that \(a(u_{\mathcal N}, v_{\mathcal N}) = f(v_{\mathcal N}) ~\forall v \in X_{\mathcal N}(\Omega)\) can be rewritten as :

Find \(u_{\mathcal N} = [u_1, \dots, u_{\mathcal N}]\) such that :

Such a system is assembled element by element. For each entity \(K\) of the mesh we compute the corresponding local tensor \(\mathcal A^K_{\mathcal N}\), to be added to the global tensor \(\mathcal A_\mathcal{N}\).

Back to our 1D Poisson problem on \(\Omega = [0,1]\), each entity of the mesh is an interval \([x_i, x_{i+1}]\) with \(1 \leqslant i < \mathcal N\). The corresponding local tensor is:

Each of these local tensor is added to the global matrix \(\mathcal A_{\mathcal N}\).

Last but not least, the problem needs boundary conditions to be well posed. In the case of our Poisson problem, we imposed \(u(0) = 0\) and \(u(1) = 1\). These type of boundary conditions known as Dirichlet boundary conditions are imposed directly in the system, replacing the correspondings rows and giving

Note that this system is sparse in the sense that the equation you get for \(v=\phi_i\) only has 3 unknowns: \(u_{i-1}, u_i\) and \(u_{i+1}\), and that this would be true even if \(N\) was a much larger number than 6. This sparsity comes from the fact that the \(\phi_i\) are very ‘local’ functions. Though we will not go into details here, this locality is really what makes the finite element method so powerful, as a sparse system of equations is a lot easier to solve numerically than a dense one.

We can then use any of your favorite linear solver to solve this system and find our approximation \(u_\mathcal N = [u_1, \dots, u_{\mathcal N}]\) :

H = 1/h

A = np.array(([

[1, 0, 0, 0, 0, 0],

[-H, 2*H, -H, 0, 0, 0],

[0, -H, 2*H, -H, 0, 0],

[0, 0, -H, 2*H, -H, 0],

[0, 0, 0, -H, 2*H, -H],

[0, 0, 0, 0, 0, 1],

]))

b = np.array([0, 0, 0, 0, 0, 1])

u = np.linalg.solve(A, b)

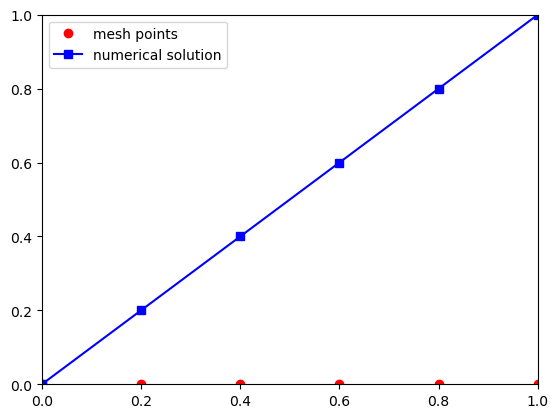

print(f"{u=}")

u=array([-8.8817842e-16, 2.0000000e-01, 4.0000000e-01, 6.0000000e-01,

8.0000000e-01, 1.0000000e+00])

From the definition of \(u_{\mathcal N}\) as a linear combination of the basis functions \(\{ \phi_i \}_{i=1}^N\), it can easily be plotted, showing we get the expected solution :

U = np.zeros(N)

for i in range(N):

U += u[i]*phi(i)

plt.plot(x, [0]*N, "ro", label="mesh points")

plt.plot(x, U, "-bs", label="numerical solution")

plt.xlim(0, 1)

plt.ylim(0, 1)

plt.legend()

<matplotlib.legend.Legend at 0x7fd52821feb0>

We see that in this case we get a very nice and linear solution \(u(x) = 1-x\). Because \(u_{xx}=0\) and the boundary conditions are satisfied, this is actually the real solution of the equation.

Summary#

The Finite Element Method can also be used to solve much more complicated PDEs, using more sophisticated basis functions \(\phi_i\), on more complicated domains \(\Omega\), etc… without significant deviations from the above procedure. The next part will give examples of the method applied to more exciting equations and demonstrate how to use FEniCS, a popular open-source computing platform for solving partial differential equations.

Exercises#

Exercise 1#

Repeat the procedure outlined here for the equation \(u_{xx} + u = 0\). If you want, let the number of mesh points \(n\) be arbitrary, otherwise just use 5. What does the system of equations look like?

Add your answer here

Exercise 2#

The boundary condition \(u=0\) at \(x=0\) corresponds to fixing the temperature of the rod at the end \(x=0\). What do you think the condition \(u_x = 0\) would represent? Can it be incorporated into the system of equations somehow?

Add your answer here

Exercise 3#

If we instead of having one equation with one unknown function had two equations with two unknown functions, could you still apply the finite element method? How?

Add your answer here

Solutions#

Exercise 1#

The weak form is \(\int u_x v_x + u v dx\). Letting the matrix of the system be \((a_{ij})_{ij}\), we again see that when \(|i - j | > 1\), \(a_{ij} = 0\), so our matrix is (again) tridiagonal.

Exercise 2#

This corresponds to coating an end of the rod in isolating material, meaning that no heat is allowed to flow out of the rod. It can be incorporated into the system of equations by modifying the boundary terms \(-u_x(0) v(0) + u_x(1)v(0)\). So if you for example want to enforce \(u_x(0) = 0\), just set the term \(u_x(0)\) to 0.

Exercise 3#

Yes. Usually, if you have two unknowns \(u_1, u_2\) and two equations \(E_1, E_2\), you would introduce two test functions \(v_1, v_2\), and multiply \(E_1\) by \(v_1\), \(E_2\) by \(v_2\) and integrate over the domain, leading to a formulation : Find $\((u_1, u_2) \in X_1(\Omega) \times X_2(\Omega)\)\( such that : \)\( a( (u_1, u_2), (v_1, v_2) ) = f(v_1, v_2) ~\forall (v_1, v_2) \in X_1(\Omega) \times X_2(\Omega)\)$

As before, we use \(u_1 = \sum_i u_{i,1} \phi_{i,1}\) and \(u_2 = \sum_i u_{i,2} \phi_{i,2}\). Note that the function spaces \(X_1(\Omega)\) and \(X_2(\Omega)\) are not necessarily equal.